IRT and the Rasch Model

I am inspired by the blog post from the team at Stitch Fix (see details here) on using item response theory for “latent size.” It is a neat approach. I need to say upfront that I am not a trained psychometrician, psychologist, etc. I understand the statistics, but there are nuances of which I know I am ignorant. However, I know that these methods are worthwhile and worth pursuing.

Similarly, I work with a lot of survey data. Classical test theory has some issues, and a construct validated through item response theory would be a more stable one. Psychological and sociological constructs are hard to measure: the signals are small and there is a lot of noise in all of the measures. For this reason more complicated methods are needed (generally speaking), which is why Bayesian methods and hierarchical modeling approaches are coming into vogue in the social sciences.

Anyways, back to IRT.

I am going to load the mirt and ltm packages for use here (Chalmers 2012; Rizopoulos 2006). The primary data set is Science. Here is a preview of the data set, which has 392 respondents and 4 items.

Science %>% head() %>% knitr::kable()

| Comfort | Work | Future | Benefit |
|---|---|---|---|
| 4 | 4 | 3 | 2 |
| 3 | 3 | 3 | 3 |
| 3 | 2 | 2 | 3 |
| 3 | 2 | 2 | 3 |
| 3 | 4 | 4 | 1 |
| 4 | 4 | 3 | 3 |

Classical Methods

In a classical methodology we could do some factor analysis and see how many latent variables we have in the items. I’ll use the n_factors function from the psycho package. This function applies ten different factor methods and then shows how many of the methods support a given number of factors.

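# Assumed calls: cor_auto() for the correlation matrix, nScree() for the scree analysis
efa <- Science %>%
  qgraph::cor_auto() %>%
  nFactors::nScree()
efa$Analysis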

| | Eigenvalues | Prop | Cumu | Par.Analysis | Pred.eig | OC | Acc.factor | AF |
|---|---|---|---|---|---|---|---|---|
| 1 | 2.0188352 | 0.5047088 | 0.5047088 | 1 | 1.1236282 | (< OC) | NA | (< AF) |
| 2 | 0.9088057 | 0.2272014 | 0.7319102 | 1 | 0.7072366 | | 0.7944225 | |
| 3 | 0.5931986 | 0.1482996 | 0.8802099 | 1 | NA | | 0.2015691 | |
| 4 | 0.4791606 | 0.1197901 | 1.0000000 | 1 | NA | | NA | |

Here the plurality of the methods (8 of 10) supports a single factor in the items.
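As a quick sanity check on the output above, the Prop and Cumu columns are just the eigenvalues rescaled by their total. The Kaiser rule (keep eigenvalues greater than 1) is one familiar criterion that points the same way; I am using it here only as an illustration, not as a claim about which ten methods n_factors applies. A minimal sketch in base R, with the eigenvalues copied by hand from the table:

```r
# Eigenvalues copied from the Analysis output above
eigenvalues <- c(2.0188352, 0.9088057, 0.5931986, 0.4791606)

# Proportion of variance per factor and its running total
prop <- eigenvalues / sum(eigenvalues)
cumu <- cumsum(prop)

# Kaiser rule: count the eigenvalues greater than 1
n_kaiser <- sum(eigenvalues > 1)

print(round(data.frame(eigenvalues, prop, cumu), 4))
print(n_kaiser)
```

The first factor alone carries about half of the variance, and it is the only one with an eigenvalue above 1.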

We could also look at Cronbach’s alpha, or tau-equivalent reliability. This metric depends on the number of items and the average inter-item correlation, so it can be “cheated” (for example, by simply adding more items), but it is still useful to compute.

a <- Science %>%
  psych::alpha()
a$total %>% knitr::kable()

| raw_alpha | std.alpha | G6(smc) | average_r | S/N | ase | mean | sd | median_r |
|---|---|---|---|---|---|---|---|---|
| 0.597724 | 0.6029445 | 0.5514024 | 0.2751706 | 1.51854 | 0.0328745 | 2.917092 | 0.5007807 | 0.2987043 |

So alpha isn’t great. Ideally this value is > 0.7.
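To see why alpha can be “cheated,” it helps to write out the standardized version, which depends only on the number of items k and the average inter-item correlation. Here is a small sketch in base R. The function name `std_alpha` is mine, and the ten-item scenario is purely hypothetical:

```r
# Standardized alpha from the number of items k and the mean inter-item correlation r_bar
std_alpha <- function(k, r_bar) {
  (k * r_bar) / (1 + (k - 1) * r_bar)
}

r_bar <- 0.2751706  # average_r from the table above

alpha_4  <- std_alpha(k = 4,  r_bar = r_bar)  # the four Science items
alpha_10 <- std_alpha(k = 10, r_bar = r_bar)  # hypothetical: ten items, same average correlation

print(c(alpha_4 = alpha_4, alpha_10 = alpha_10))
```

With four items this reproduces the std.alpha value in the table. Adding items that are no more strongly related to each other would still push alpha past the 0.7 rule of thumb.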

IRT

Now let’s go to IRT! For this instance there isn’t a correct answer per se, like there is on the SAT. Instead, the “correct” answer is treated as the response that is most difficult to endorse with respect to the latent trait, typically one end of the scale (strongly agree, strongly disagree, or frequently).

So now using a graded response model:

lmod <- ltm::grm(data = Science, IRT.param = FALSE)
lmod

Call:

ltm::grm(data = Science, IRT.param = FALSE)

Model Summary:

log.Lik AIC BIC

-1608.871 3249.742 3313.282

Coefficients:

$Comfort

value

beta.1 -4.863

beta.2 -2.639

beta.3 1.466

beta 1.041

$Work

value

beta.1 -2.924

beta.2 -0.901

beta.3 2.267

beta 1.226

$Future

value

beta.1 -5.243

beta.2 -2.217

beta.3 1.967

beta 2.299

$Benefit

value

beta.1 -3.347

beta.2 -0.991

beta.3 1.688

beta 1.094

Integration:

method: Gauss-Hermite

quadrature points: 21

Optimization:

Convergence: 0

max(|grad|): 0.0092

quasi-Newton: BFGS

Equivalently, you can do this in mirt.

(mmod <- mirt(data = Science, model = 1, verbose = FALSE))

Call:

mirt(data = Science, model = 1, verbose = FALSE)

Full-information item factor analysis with 1 factor(s).

Converged within 1e-04 tolerance after 36 EM iterations.

mirt version: 1.38.1

M-step optimizer: BFGS

EM acceleration: Ramsay

Number of rectangular quadrature: 61

Latent density type: Gaussian

Log-likelihood = -1608.87

Estimated parameters: 16

AIC = 3249.739

BIC = 3313.279; SABIC = 3262.512

G2 (239) = 213.56, p = 0.8804

RMSEA = 0, CFI = NaN, TLI = NaN
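One way to convince yourself that the two packages fit the same model is to rebuild the information criteria from the reported log-likelihood. The sketch below uses the usual AIC and BIC definitions and the rounded numbers printed above, so it only matches the output to about two decimals:

```r
log_lik  <- -1608.87  # log-likelihood reported by mirt (rounded)
n_params <- 16        # 4 items x (1 slope + 3 intercepts)
n_obs    <- 392       # respondents

aic <- -2 * log_lik + 2 * n_params
bic <- -2 * log_lik + log(n_obs) * n_params

print(c(AIC = aic, BIC = bic))
```

Both values agree with the ltm and mirt summaries up to rounding.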

Now we can just look at the items and get a feel for the difficulty.

$items

a1 d1 d2 d3

Comfort 1.041755 4.864154 2.6399417 -1.466013

Work 1.225962 2.924003 0.9011651 -2.266565

Future 2.293372 5.233993 2.2137728 -1.963706

Benefit 1.094915 3.347920 0.9916289 -1.688260
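To make these numbers a little more concrete, here is a rough sketch of how the parameters turn into response probabilities. I am assuming the slope-intercept form of the graded response model, in which the probability of responding in category k or higher is the logistic function of a1 * theta + d; the ltm coefficients above appear to be the same quantities with the sign of the intercepts flipped. The helper `grm_probs` is my own name, not a function from either package:

```r
# Slope-intercept estimates for the Comfort item, copied from the output above
a <- 1.041755
d <- c(4.864154, 2.6399417, -1.466013)

# Category probabilities under the graded response model at a single theta
grm_probs <- function(theta, a, d) {
  # P(Y >= k) for k = 2..K, padded with P(Y >= 1) = 1 and P(Y >= K + 1) = 0
  cum <- c(1, plogis(a * theta + d), 0)
  -diff(cum)  # P(Y = k) = P(Y >= k) - P(Y >= k + 1)
}

# Thresholds on the latent scale: the theta where P(Y >= k) crosses 0.5
b <- -d / a

probs_avg  <- grm_probs(theta = 0, a = a, d = d)  # an average respondent
probs_high <- grm_probs(theta = 2, a = a, d = d)  # two standard deviations above average

print(round(b, 2))
print(round(rbind(probs_avg, probs_high), 3))
```

The thresholds `b` are the “difficulty” values on the same scale as the latent trait: the further right a threshold sits, the more of the trait a respondent needs before the higher category becomes the more likely answer.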

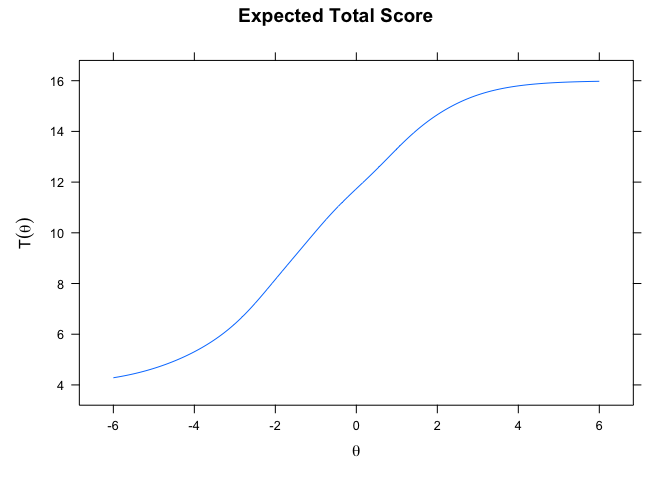

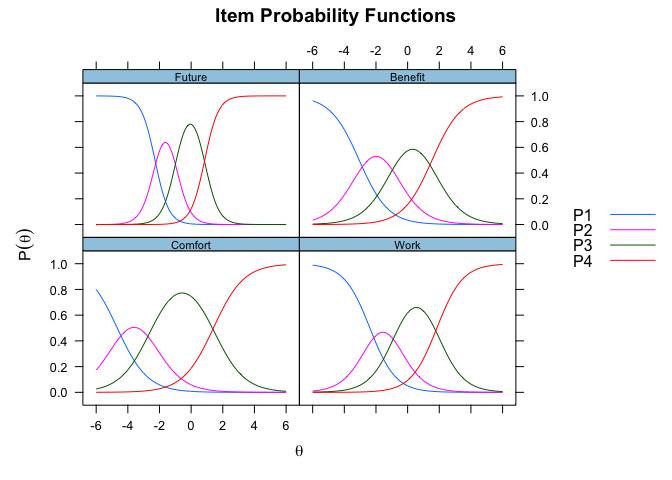

Now the really cool thing is to look at the item characteristic curves.

And the individual ICCs…

I hope to go into detail soon, but what these plots allow us to see is each item’s difficulty and its ability to discriminate along the latent trait, that is, how well the item separates respondents with different levels of the latent factor. And all this to say, once the items have been validated you can easily score the participants for the presence of the latent factor (yahoo!).

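# Assumed pipeline: factor scores from the mirt fit, one row per respondent
fscores(mmod) %>%
  as.data.frame() %>%
  tibble::rowid_to_column("subject") %>%
  head() %>%
  knitr::kable()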

| subject | F1 |
|---|---|
| 1 | 0.4015613 |
| 2 | 0.0520324 |
| 3 | -0.8906436 |
| 4 | -0.8906436 |
| 5 | 0.7653806 |
| 6 | 0.6695350 |
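For the curious, here is a bare-bones sketch of what a score like this can look like under the hood. I am assuming expected a posteriori (EAP) scoring with a standard normal prior on a fixed grid, and the slope-intercept graded response form; whether that matches the exact settings behind the table above is something I have not verified. The helper names `p_response` and `eap_score` are mine:

```r
# Slope-intercept estimates copied from the mirt output (rows: Comfort, Work, Future, Benefit)
a <- c(1.041755, 1.225962, 2.293372, 1.094915)
d <- rbind(
  c(4.864154, 2.6399417, -1.466013),
  c(2.924003, 0.9011651, -2.266565),
  c(5.233993, 2.2137728, -1.963706),
  c(3.347920, 0.9916289, -1.688260)
)

# Probability of the observed category y (1..4) for one item at one theta
p_response <- function(theta, a, d, y) {
  cum <- c(1, plogis(a * theta + d), 0)
  cum[y] - cum[y + 1]
}

# EAP score: posterior mean of theta on a grid, with a standard normal prior
eap_score <- function(responses, grid = seq(-6, 6, length.out = 61)) {
  likelihood <- vapply(grid, function(theta) {
    prod(vapply(seq_along(a), function(i) {
      p_response(theta, a[i], d[i, ], responses[i])
    }, numeric(1)))
  }, numeric(1))
  posterior <- likelihood * dnorm(grid)
  sum(grid * posterior) / sum(posterior)
}

score_1 <- eap_score(c(4, 4, 3, 2))  # first respondent in the data preview
score_3 <- eap_score(c(3, 2, 2, 3))  # third respondent
score_4 <- eap_score(c(3, 2, 2, 3))  # fourth respondent, same answers as the third

print(c(score_1 = score_1, score_3 = score_3, score_4 = score_4))
```

Respondents with identical answers get identical scores, which is why subjects 3 and 4 share a value in the table, and the score rises as the answers move toward the top of the scale.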

Chalmers, R. Philip. 2012. “mirt: A Multidimensional Item Response Theory Package for the R Environment.” Journal of Statistical Software 48 (6): 1–29. https://doi.org/10.18637/jss.v048.i06.

Rizopoulos, Dimitris. 2006. “ltm: An R Package for Latent Variable Modelling and Item Response Theory Analyses.” Journal of Statistical Software 17 (5): 1–25. http://www.jstatsoft.org/v17/i05/.

Citation

BibTeX citation:

@online{dewitt2018,

author = {Michael E. DeWitt},

title = {IRT and the Rasch Model},

date = 2018-07-11,

url = {https://michaeldewittjr.com/articles/2018-07-11-irt-and-the-rasch-model},

langid = {en}

}

For attribution, please cite this work as:

Michael E. DeWitt. 2018. "IRT and the Rasch Model." July 11, 2018. https://michaeldewittjr.com/articles/2018-07-11-irt-and-the-rasch-model