How Discerning is the Technical Challenge in GBBO?

My wife and I love to watch the Great British Bake Off on Netflix. The competition is, for the most part, collegial and all-around feel-good television, especially at night. After we had watched several seasons of the show, a lingering question came to mind: how good are the judges at estimating talent?

The show is composed of three rounds. The first and third rounds have a theme or genre of baked good that the contestants know about in advance, so they can decide (and practice) what they want to make. The second, middle round is a “technical” challenge in which the bakers are all given the same ingredients and instructions and asked to make something of which they had no prior knowledge. Unlike the other rounds, the judges judge each bake blindly in the technical (and of course the contestants all make the same dish).

Enter the Psychometrics

This set-up is perfect for understanding how well the judges can estimate “ability,” to use a psychometric term. Because the contestants face exactly the same challenge and are judged blindly, we can use psychometric tools to estimate the “ability” of each baker and the “difficulty” of each challenge. These measures are noisy because contestants are eliminated after each show, so not every baker gets a chance at each challenge, but they still give a little insight into the ability of the bakers. We can then compare the outcomes of each round with the judged “technical” ability.

IRT and CRM

High-stakes tests like the GRE and GMAT use something called Item Response Theory (IRT) to measure “ability.” The tests work by matching item difficulty (how hard a question is) to the test-taker’s latent ability (tendency to get the right answer). Test takers should get the correct answer on items where their ability is greater than the item difficulty and the wrong answer where the difficulty is greater than their ability, with some spread around that pattern due to measurement error in both ability and difficulty.
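To make the ability-versus-difficulty idea concrete, here is a minimal sketch of the two-parameter logistic (2PL) item response function in base R. The function name `p_correct` and the example values are made up for illustration; they are not part of the analysis in this post.

```r
# Two-parameter logistic (2PL) item response function.
# theta: test-taker ability; b: item difficulty; a: item discrimination.
p_correct <- function(theta, b, a = 1) {
  plogis(a * (theta - b))
}

# Ability equal to difficulty gives an even chance of a correct answer.
p_correct(theta = 0, b = 0)

# Ability above the difficulty pushes the probability above one half,
# ability below the difficulty pushes it under one half.
p_correct(theta = 1, b = 0)
p_correct(theta = -1, b = 0)
```

The discrimination `a` controls how sharply the probability switches from "likely wrong" to "likely right" as ability passes the item difficulty.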

Not mentioned here, but a random component for guessing can be added. The literature on IRT is immense and there are many different models (Rasch, 2PL, and 3PL, among others).

The key contribution of IRT over classical test theory (in my opinion) is that it allows for some latent noise in the test question/item itself.

IRT typically requires a single “correct” answer. Here we are looking at the ranking of bakers from 1 to N, so we need to treat the response as continuous rather than right or wrong. Enter continuous response models (CRM), which allow us to use the principles of IRT for continuous data. In particular, we will use Samejima’s continuous response model for the ranking of contestants.
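For reference, one common way to write the continuous response model, and the form that the likelihood in the Stan program later in this post appears to follow, first maps a bounded score onto the real line and then treats the transformed score as normal. Here i indexes bakers and j indexes technical challenges; the symbol k (the upper bound of the score) and the exact transform are assumptions introduced for this sketch.

$$
Z_{ij} = \ln\!\left(\frac{X_{ij}}{k - X_{ij}}\right), \qquad
Z_{ij} \mid \theta_i \sim \mathcal{N}\!\left(\alpha_j\,(\theta_i - b_j),\; \frac{\alpha_j^{2}}{a_j^{2}}\right)
$$

As in ordinary IRT, θ is the baker's ability, b is the difficulty of the challenge, and a is its discrimination; α is an extra scaling parameter for each challenge.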

Analysis Plan

So now we can lay out our analysis plan:

- Get the baking results

- Run the CRM to understand and rank baker ability

- Compare the modelled ability to the actual results

Getting the Data

The first part in this analysis is getting the data. Luckily, Wikipedia, the grandest resource on the interweb, provides these data in a regular pattern.

First, we will load the usual suite of packages for webscraping and analysis.

To run an initial test, I am just going to pull Season 3.

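url <- "https://en.m.wikipedia.org/wiki/The_Great_British_Bake_Off_(series_3)"

# Assumed reconstruction: the right-hand sides of the next two lines were lost,
# so the usual rvest calls for reading a page and its tables are used here.
content <- rvest::read_html(url)

tables <- content %>% rvest::html_table()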

We can see that there are 18 tables available. The second table gives us the biographies of the contestants:

knitr::

| Baker | Age | Occupation | Hometown | Links |

|---|---|---|---|---|

| Brendan Lynch | 63 | Recruitment consultant | Sutton Coldfield | [4] |

| Cathryn Dresser | 27 | Shop assistant | Pease Pottage, West Sussex | [5] |

| Danny Bryden | 45 | Intensive care consultant | Sheffield | [6] |

| James Morton | 21 | Medical student | Hillswick, Shetland Islands | [7] |

| John Whaite | 22 | Law student | Wigan | [8] |

| Manisha Parmar | 27 | Nursery nurse | Leicester | |

| Natasha Stringer | 36 | Midwife | Tamworth, Staffordshire | |

| Peter Maloney | 43 | Sales manager | Windsor, Berkshire | |

| Ryan Chong | 38 | Photographer | Bristol | [9] |

| Sarah-Jane Willis | 28 | Vicar’s wife | Bewbush, West Sussex | [5] |

| Stuart Marston-Smith | 26 | PE teacher | Lichfield, Staffordshire | [10] |

| Victoria Chester | 50 | CEO of the charity Plantlife | Somerset | [11] |

With a little bit of work, we can turn the third table into a nice representation of the results.

tables %>%

%>%

. %>%

%>%

%>%

. %>%

%>%

->performance

knitr::

| baker | variable | value | round_num | perf |

|---|---|---|---|---|

| Brendan | 1 | SAFE | 1 | SAFE 1 |

| James | 1 | SAFE | 1 | SAFE 1 |

| Danny | 1 | SAFE | 1 | SAFE 1 |

| Cathryn | 1 | HIGH | 1 | HIGH 1 |

| Ryan | 1 | SAFE | 1 | SAFE 1 |

| Sarah-Jane | 1 | SAFE | 1 | SAFE 1 |

| Manisha | 1 | SAFE | 1 | SAFE 1 |

| Stuart | 1 | LOW | 1 | LOW 1 |

| Peter | 1 | SAFE | 1 | SAFE 1 |

| Natasha | 1 | OUT | 1 | OUT 1 |

| Brendan | 2 | HIGH | 2 | HIGH 2 |

| James | 2 | HIGH | 2 | HIGH 2 |

| Danny | 2 | SAFE | 2 | SAFE 2 |

| Cathryn | 2 | SAFE | 2 | SAFE 2 |

| Ryan | 2 | SAFE | 2 | SAFE 2 |

| Sarah-Jane | 2 | SAFE | 2 | SAFE 2 |

| Manisha | 2 | SAFE | 2 | SAFE 2 |

| Stuart | 2 | LOW | 2 | LOW 2 |

| Victoria | 2 | LOW | 2 | LOW 2 |

| Peter | 2 | OUT | 2 | OUT 2 |

| Brendan | 3 | LOW | 3 | LOW 3 |

| Danny | 3 | SAFE | 3 | SAFE 3 |

| Cathryn | 3 | SAFE | 3 | SAFE 3 |

| Ryan | 3 | SAFE | 3 | SAFE 3 |

| Sarah-Jane | 3 | SAFE | 3 | SAFE 3 |

| Manisha | 3 | SAFE | 3 | SAFE 3 |

| Stuart | 3 | HIGH | 3 | HIGH 3 |

| Victoria | 3 | OUT | 3 | OUT 3 |

| James | 4 | SAFE | 4 | SAFE 4 |

| Danny | 4 | HIGH | 4 | HIGH 4 |

| Cathryn | 4 | SAFE | 4 | SAFE 4 |

| Ryan | 4 | LOW | 4 | LOW 4 |

| Sarah-Jane | 4 | SAFE | 4 | SAFE 4 |

| Manisha | 4 | LOW | 4 | LOW 4 |

| Stuart | 4 | OUT | 4 | OUT 4 |

| Brendan | 5 | HIGH | 5 | HIGH 5 |

| James | 5 | SAFE | 5 | SAFE 5 |

| Danny | 5 | LOW | 5 | LOW 5 |

| Cathryn | 5 | SAFE | 5 | SAFE 5 |

| Sarah-Jane | 5 | LOW | 5 | LOW 5 |

| Manisha | 5 | OUT | 5 | OUT 5 |

| James | 6 | LOW | 6 | LOW 6 |

| Danny | 6 | HIGH | 6 | HIGH 6 |

| Cathryn | 6 | SAFE | 6 | SAFE 6 |

| Ryan | 6 | SAFE | 6 | SAFE 6 |

| Sarah-Jane | 6 | LOW | 6 | LOW 6 |

| Brendan | 7 | HIGH | 7 | HIGH 7 |

| James | 7 | LOW | 7 | LOW 7 |

| Cathryn | 7 | LOW | 7 | LOW 7 |

| Ryan | 7 | OUT | 7 | OUT 7 |

| Sarah-Jane | 7 | OUT | 7 | OUT 7 |

| Brendan | 8 | SAFE | 8 | SAFE 8 |

| Danny | 8 | LOW | 8 | LOW 8 |

| Cathryn | 8 | OUT | 8 | OUT 8 |

| Brendan | 9 | HIGH | 9 | HIGH 9 |

| Danny | 9 | OUT | 9 | OUT 9 |

| Brendan | 10 | Runner-up | 10 | Runner-up 10 |

| James | 10 | Runner-up | 10 | Runner-up 10 |

Now the more challenging part is to parse all of the results. I am going to use some loops and index variables because I can’t think of a more expedient way to do it.

Importantly, each baker will appear once for every challenge in which they participated. This means someone who was eliminated after the first show will only have one record (enter measurement error), while those who participated in later rounds will appear multiple times.

technicals <-

z <- 1

for(i in ){

x <- tables

interesting <-

if(<2){

next()

}

y <- x

<-

y$technical_no <- z

technicals <- y

z <- 1+z

}

out_long<-

knitr::

| baker | technical | technical_no |

|---|---|---|

| Brendan | 10th | 1 |

| Cathryn | 5th | 1 |

| Danny | 7th | 1 |

| James | 2nd | 1 |

| John | 11th | 1 |

| Manisha | 6th | 1 |

| Natasha | 12th | 1 |

| Peter | 3rd | 1 |

| Ryan | 8th | 1 |

| Sarah-Jane | 1st | 1 |
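The loop above can be sketched in base R roughly as follows. This is a sketch under assumptions, not the original code: the `tables` list here is a toy stand-in for the scraped tables, the column names `Baker` and `Technical` are hypothetical, and the test used to skip non-episode tables is a guess at what the original condition did.

```r
# Toy stand-in for the scraped list of tables: only some of them are
# episode tables that contain a "Technical" column.
tables <- list(
  data.frame(Baker = c("A", "B"), Age = c(30, 40)),
  data.frame(Baker = c("A", "B"), Technical = c("2nd", "1st")),
  data.frame(Baker = c("A", "B"), Technical = c("1st", "2nd"))
)

technicals <- list()
z <- 1  # running index of the technical challenges found so far

for (i in seq_along(tables)) {
  x <- tables[[i]]
  # Skip tables that are not episode results.
  if (!"Technical" %in% names(x)) next
  y <- data.frame(
    baker = x$Baker,
    technical = x$Technical,
    technical_no = z
  )
  technicals[[z]] <- y
  z <- z + 1
}

# Stack the per-episode tables into one long data frame.
out_long <- do.call(rbind, technicals)
out_long
```

Keeping a separate counter `z` means the technical number counts only the tables that were kept, not their position on the page.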

Now with a little more parsing we can extract the result and associated rank of the bakers.

out_long

out_long

out_long

knitr::

| baker | technical | technical_no | rank | rank_ordered | baker_id |

|---|---|---|---|---|---|

| Brendan | 10th | 1 | 10 | 2 | 1 |

| Cathryn | 5th | 1 | 5 | 7 | 2 |

| Danny | 7th | 1 | 7 | 5 | 3 |

| James | 2nd | 1 | 2 | 10 | 4 |

| John | 11th | 1 | 11 | 1 | 5 |

| Manisha | 6th | 1 | 6 | 6 | 6 |

| Natasha | 12th | 1 | 12 | 0 | 7 |

| Peter | 3rd | 1 | 3 | 9 | 8 |

| Ryan | 8th | 1 | 8 | 4 | 9 |

| Sarah-Jane | 1st | 1 | 1 | 11 | 10 |
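One way to get from the `technical` column to the three new columns is sketched below, using the rows shown in the table. It is a guess at the transformation rather than the original code: in particular, `n_bakers <- 12` and the rule `rank_ordered = n_bakers - rank` are assumptions that happen to reproduce the values above for the first technical.

```r
out_long <- data.frame(
  baker = c("Brendan", "Cathryn", "Danny", "James", "John", "Manisha",
            "Natasha", "Peter", "Ryan", "Sarah-Jane"),
  technical = c("10th", "5th", "7th", "2nd", "11th", "6th",
                "12th", "3rd", "8th", "1st"),
  technical_no = 1
)

n_bakers <- 12  # assumed: number of bakers in the first technical

# Strip the ordinal suffix ("10th" -> 10).
out_long$rank <- as.integer(gsub("[^0-9]", "", out_long$technical))

# Flip the scale so that a larger number means a better finish.
out_long$rank_ordered <- n_bakers - out_long$rank

# Integer id per baker, assigned in alphabetical order.
out_long$baker_id <- as.integer(factor(out_long$baker))

out_long
```

Flipping the scale keeps the usual IRT reading, where a higher response goes with higher ability.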

Modelling the Data

In complete transparency, I used code from https://cengiz.me/posts/crm-stan/, which provided an excellent starting point for the analysis.

The code is lightly modified, just to tighten some priors.

// From https://cengiz.me/posts/crm-stan/
data {
  int J;                 // number of items
  int I;                 // number of individuals
  int N;                 // number of observed responses
  array[N] int item;     // item id
  array[N] int id;       // person id
  array[N] real Y;       // vector of transformed outcome
}
parameters {
  vector[J] b;                  // vector of b parameters for J items
  real mu_b;                    // mean of the b parameters
  real<lower=0> sigma_b;        // standard dev. of the b parameters
  vector<lower=0>[J] a;         // vector of a parameters for J items
  real mu_a;                    // mean of the a parameters
  real<lower=0> sigma_a;        // standard deviation of the a parameters
  vector<lower=0>[J] alpha;     // vector of alpha parameters for J items
  real mu_alpha;                // mean of alpha parameters
  real<lower=0> sigma_alpha;    // standard deviation of alpha parameters
  vector[I] theta;              // vector of theta parameters for I individuals
}
model {
  mu_b ~ normal(0, 5);
  sigma_b ~ normal(0, 1);
  b ~ normal(mu_b, sigma_b);
  mu_a ~ normal(0, 5);
  sigma_a ~ normal(0, 2.5);
  a ~ normal(mu_a, sigma_a);
  mu_alpha ~ normal(0, 5);
  sigma_alpha ~ cauchy(0, 2.5);
  alpha ~ normal(mu_alpha, sigma_alpha);
  // The mean and variance of theta are fixed to 0 and 1 for model identification
  theta ~ normal(0, 1);
  for (i in 1:N) {
    Y[i] ~ normal(alpha[item[i]] * (theta[id[i]] - b[item[i]]), alpha[item[i]] / a[item[i]]);
  }
}

Now we just compile the model and format our data:

mod <-

dat_stan <-

baker_list <- out_long %>%

%>%
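As a rough guide to what the data list has to look like, the sketch below builds one in base R that matches the `data` block of the Stan program (J, I, N, item, id, Y). The toy data frame, the bound `k`, and especially the logit transform with a 0.5 offset are assumptions made for illustration; the transform actually used for this post is not shown.

```r
# Toy long-format data; the column names follow the table shown earlier.
out_long <- data.frame(
  baker_id     = c(1, 2, 3, 1, 2),
  technical_no = c(1, 1, 1, 2, 2),
  rank_ordered = c(2, 0, 1, 1, 0)
)

k <- 3  # assumed number of bakers, so rank_ordered runs from 0 to k - 1

# Assumed transform: shift by 0.5 so the best and worst finishers stay
# finite, then take the logit to move the bounded score to the real line.
p <- (out_long$rank_ordered + 0.5) / k
y <- log(p / (1 - p))

dat_stan <- list(
  J = length(unique(out_long$technical_no)),  # number of items (technicals)
  I = length(unique(out_long$baker_id)),      # number of individuals (bakers)
  N = nrow(out_long),                         # number of observed responses
  item = as.integer(out_long$technical_no),
  id = as.integer(out_long$baker_id),
  Y = y
)
str(dat_stan)
```

Because bakers drop out over time, N is smaller than I times J; the long format with `item` and `id` index vectors handles that without any padding.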

We can then fit the model with our data.

fit <- mod$sample(data = dat_stan, parallel_chains = 4)  # assumed arguments; the original call was truncated

Running MCMC with 4 parallel chains...

Chain 3 finished in 73.7 seconds.

Chain 4 finished in 79.1 seconds.

Chain 2 finished in 80.1 seconds.

Chain 1 finished in 111.4 seconds.

All 4 chains finished successfully.

Mean chain execution time: 86.1 seconds.

Total execution time: 111.5 seconds.

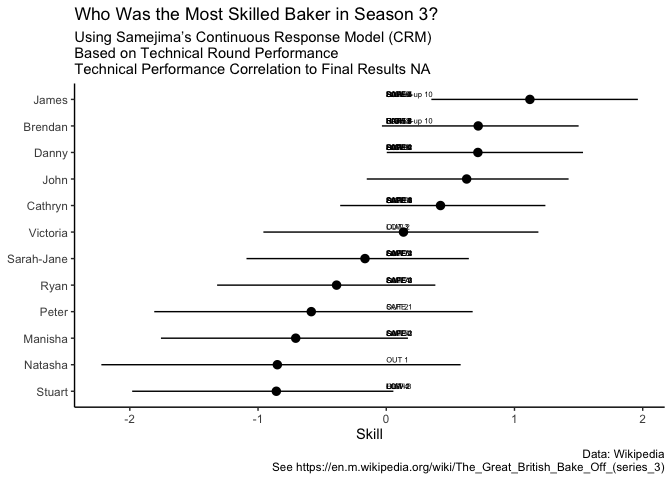

We’re interested here in theta, which represents the ability of the bakers.
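A minimal sketch of how posterior draws of theta can be turned into a modelled ranking is shown below. It uses simulated draws so that it runs on its own; the baker labels and the numbers are fake. With a cmdstanr fit, a comparable matrix could presumably be pulled with `fit$draws("theta", format = "draws_matrix")`.

```r
set.seed(1)
bakers <- c("A", "B", "C")

# Fake posterior draws: 1000 draws (rows) for 3 bakers (columns).
theta_draws <- cbind(
  rnorm(1000, mean = 1.0, sd = 0.5),
  rnorm(1000, mean = -0.5, sd = 0.5),
  rnorm(1000, mean = 0.2, sd = 0.5)
)
colnames(theta_draws) <- bakers

# Posterior mean and a 90% interval for each baker.
theta_summary <- data.frame(
  baker = bakers,
  mean = colMeans(theta_draws),
  lower = apply(theta_draws, 2, quantile, probs = 0.05),
  upper = apply(theta_draws, 2, quantile, probs = 0.95)
)

# Rank 1 = highest modelled ability.
theta_summary$outcome_modelled <- rank(-theta_summary$mean)
theta_summary[order(theta_summary$outcome_modelled), ]
```

Ranking on the posterior mean throws away the uncertainty, so it is worth keeping the interval columns around when bakers have only one or two observations.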

combined_out <- fit$ %>%

%>%

out_come_with_rank <- combined_out %>%

%>%

%>%

%>%

%>%

knitr::

| outcome_modelled | baker | variable | value | round_num | perf | outcome_realized |

|---|---|---|---|---|---|---|

| 1 | James | 1 | SAFE | 1 | SAFE 1 | 12 |

| 1 | James | 2 | HIGH | 2 | HIGH 2 | 11 |

| 1 | James | 4 | SAFE | 4 | SAFE 4 | 9 |

| 1 | James | 5 | SAFE | 5 | SAFE 5 | 8 |

| 1 | James | 6 | LOW | 6 | LOW 6 | 7 |

| 1 | James | 7 | LOW | 7 | LOW 7 | 6 |

| 1 | James | 10 | Runner-up | 10 | Runner-up 10 | 2 |

| 2 | Brendan | 1 | SAFE | 1 | SAFE 1 | 12 |

| 2 | Brendan | 2 | HIGH | 2 | HIGH 2 | 11 |

| 2 | Brendan | 3 | LOW | 3 | LOW 3 | 10 |

| 2 | Brendan | 5 | HIGH | 5 | HIGH 5 | 8 |

| 2 | Brendan | 7 | HIGH | 7 | HIGH 7 | 6 |

| 2 | Brendan | 8 | SAFE | 8 | SAFE 8 | 5 |

| 2 | Brendan | 9 | HIGH | 9 | HIGH 9 | 4 |

| 2 | Brendan | 10 | Runner-up | 10 | Runner-up 10 | 2 |

| 3 | Danny | 1 | SAFE | 1 | SAFE 1 | 12 |

| 3 | Danny | 2 | SAFE | 2 | SAFE 2 | 11 |

| 3 | Danny | 3 | SAFE | 3 | SAFE 3 | 10 |

| 3 | Danny | 4 | HIGH | 4 | HIGH 4 | 9 |

| 3 | Danny | 5 | LOW | 5 | LOW 5 | 8 |

| 3 | Danny | 6 | HIGH | 6 | HIGH 6 | 7 |

| 3 | Danny | 8 | LOW | 8 | LOW 8 | 5 |

| 3 | Danny | 9 | OUT | 9 | OUT 9 | 4 |

| 4 | John | NA | NA | NA | NA | NA |

| 5 | Cathryn | 1 | HIGH | 1 | HIGH 1 | 12 |

| 5 | Cathryn | 2 | SAFE | 2 | SAFE 2 | 11 |

| 5 | Cathryn | 3 | SAFE | 3 | SAFE 3 | 10 |

| 5 | Cathryn | 4 | SAFE | 4 | SAFE 4 | 9 |

| 5 | Cathryn | 5 | SAFE | 5 | SAFE 5 | 8 |

| 5 | Cathryn | 6 | SAFE | 6 | SAFE 6 | 7 |

| 5 | Cathryn | 7 | LOW | 7 | LOW 7 | 6 |

| 5 | Cathryn | 8 | OUT | 8 | OUT 8 | 5 |

| 6 | Victoria | 2 | LOW | 2 | LOW 2 | 11 |

| 6 | Victoria | 3 | OUT | 3 | OUT 3 | 10 |

| 7 | Sarah-Jane | 1 | SAFE | 1 | SAFE 1 | 12 |

| 7 | Sarah-Jane | 2 | SAFE | 2 | SAFE 2 | 11 |

| 7 | Sarah-Jane | 3 | SAFE | 3 | SAFE 3 | 10 |

| 7 | Sarah-Jane | 4 | SAFE | 4 | SAFE 4 | 9 |

| 7 | Sarah-Jane | 5 | LOW | 5 | LOW 5 | 8 |

| 7 | Sarah-Jane | 6 | LOW | 6 | LOW 6 | 7 |

| 7 | Sarah-Jane | 7 | OUT | 7 | OUT 7 | 6 |

| 8 | Ryan | 1 | SAFE | 1 | SAFE 1 | 12 |

| 8 | Ryan | 2 | SAFE | 2 | SAFE 2 | 11 |

| 8 | Ryan | 3 | SAFE | 3 | SAFE 3 | 10 |

| 8 | Ryan | 4 | LOW | 4 | LOW 4 | 9 |

| 8 | Ryan | 6 | SAFE | 6 | SAFE 6 | 7 |

| 8 | Ryan | 7 | OUT | 7 | OUT 7 | 6 |

| 9 | Peter | 1 | SAFE | 1 | SAFE 1 | 12 |

| 9 | Peter | 2 | OUT | 2 | OUT 2 | 11 |

| 10 | Manisha | 1 | SAFE | 1 | SAFE 1 | 12 |

| 10 | Manisha | 2 | SAFE | 2 | SAFE 2 | 11 |

| 10 | Manisha | 3 | SAFE | 3 | SAFE 3 | 10 |

| 10 | Manisha | 4 | LOW | 4 | LOW 4 | 9 |

| 10 | Manisha | 5 | OUT | 5 | OUT 5 | 8 |

| 11 | Natasha | 1 | OUT | 1 | OUT 1 | 12 |

| 12 | Stuart | 1 | LOW | 1 | LOW 1 | 12 |

| 12 | Stuart | 2 | LOW | 2 | LOW 2 | 11 |

| 12 | Stuart | 3 | HIGH | 3 | HIGH 3 | 10 |

| 12 | Stuart | 4 | OUT | 4 | OUT 4 | 9 |

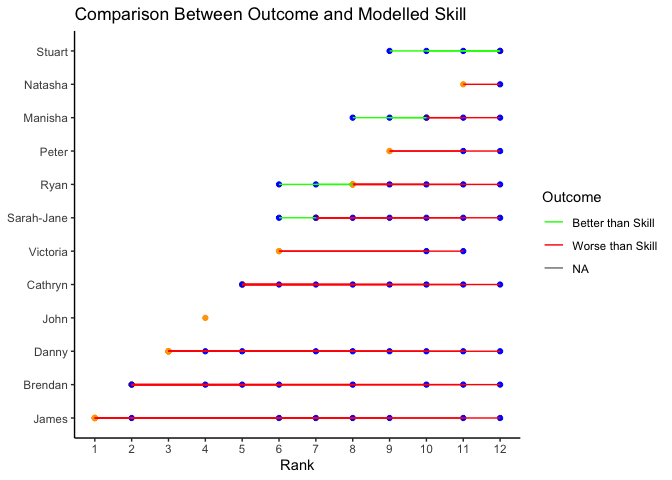

Now we can check how well the modelled ability correlates with the realized performance:

(correlation_analysis <-out_come_with_rank %>%

%>%

%>%

%>%

. %>%

)

[1] NA

Not too bad! It would seem that there is evidence that the performance in the technical is correlated with the final result (thank goodness).
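A note on the `[1] NA` printed above: that is what `cor()` returns by default when any value is missing, and John's row has no realized outcome in the table, so that is the most likely cause. The sketch below shows one way around it. It assumes that each baker's realized outcome is the value in their last row of the table above and that a rank (Spearman) correlation is a reasonable choice; neither is confirmed by the original code.

```r
outcomes <- data.frame(
  baker = c("James", "Brendan", "Danny", "John", "Cathryn", "Victoria",
            "Sarah-Jane", "Ryan", "Peter", "Manisha", "Natasha", "Stuart"),
  outcome_modelled = 1:12,
  outcome_realized = c(2, 2, 4, NA, 5, 10, 6, 6, 11, 8, 12, 9)
)

# With the default settings a single NA makes the whole result NA.
cor(outcomes$outcome_modelled, outcomes$outcome_realized)

# Dropping incomplete pairs and correlating ranks gives a usable number.
rho <- cor(
  outcomes$outcome_modelled,
  outcomes$outcome_realized,
  method = "spearman",
  use = "complete.obs"
)
rho
```

Dropping John is only a stopgap; the missing value itself suggests that his row was lost when the results table was parsed, which would be worth fixing upstream.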

combined_out %>%

+

+

+

+

+

+

->p

p

out_come_with_rank %>%

%>%

%>%

+

+

+

+

+

+

+

->p2

p2

Next Steps

This analysis only covers one season. It would be neat to come back and do all of the seasons to get a feel for the level of difficulty of the different rounds (i.e., was the technical in round 3 of similar difficulty in each season). Additionally it would be neat to see if this relationship between the technical score and final outcome held up in each season.

Citation

BibTeX citation:

@online{dewitt2021,

author = {Michael E. DeWitt},

title = {How Discerning is the Technical Challenge in GBBO?},

date = 2021-09-23,

url = {https://michaeldewittjr.com/articles/2021-09-23-how-discerning-is-the-technical-challenge-in-gbbo},

langid = {en}

}

For attribution, please cite this work as:

Michael E. DeWitt. 2021. "How Discerning is the Technical Challenge in GBBO?" September 23, 2021. https://michaeldewittjr.com/articles/2021-09-23-how-discerning-is-the-technical-challenge-in-gbbo